Accelerate Neural Subspace-Based Reduced-Order Solver of Deformable Simulation by Lipschitz Optimization

SIGGRAPH Asia 2024 (ACM Transactions on Graphics)

Abstract

Reduced-order simulation is an emerging method for accelerating physical simulations with many degrees of freedom (DOFs). Recently developed neural-network-based methods with nonlinear subspaces have proven effective in diverse applications because they can find more concise subspaces. However, the complexity and landscape of the simulation objective within the subspace have not been optimized, which leaves room to improve convergence speed.

This work addresses this gap by proposing a general method for finding optimized subspace mappings, enabling further acceleration of neural reduced-order simulations while capturing comprehensive representations of the configuration manifolds. We achieve this by optimizing the Lipschitz energy of the elasticity term in the simulation objective, and by incorporating the cubature approximation into the training process to manage the high memory and time demands of optimizing the newly introduced energy. Our method is versatile and applies to both supervised and unsupervised settings for optimizing the parameterizations of the configuration manifolds. We demonstrate the effectiveness of our approach through general cases in both quasi-static and dynamic simulations.

Our method achieves acceleration factors of up to 6.83x while consistently preserving comparable simulation accuracy in various cases, including large twisting, bending, and rotational deformations with collision handling. This approach offers significant potential for accelerating physical simulations, and can serve as an add-on to existing neural-network-based solutions for modeling complex deformable objects.

Video

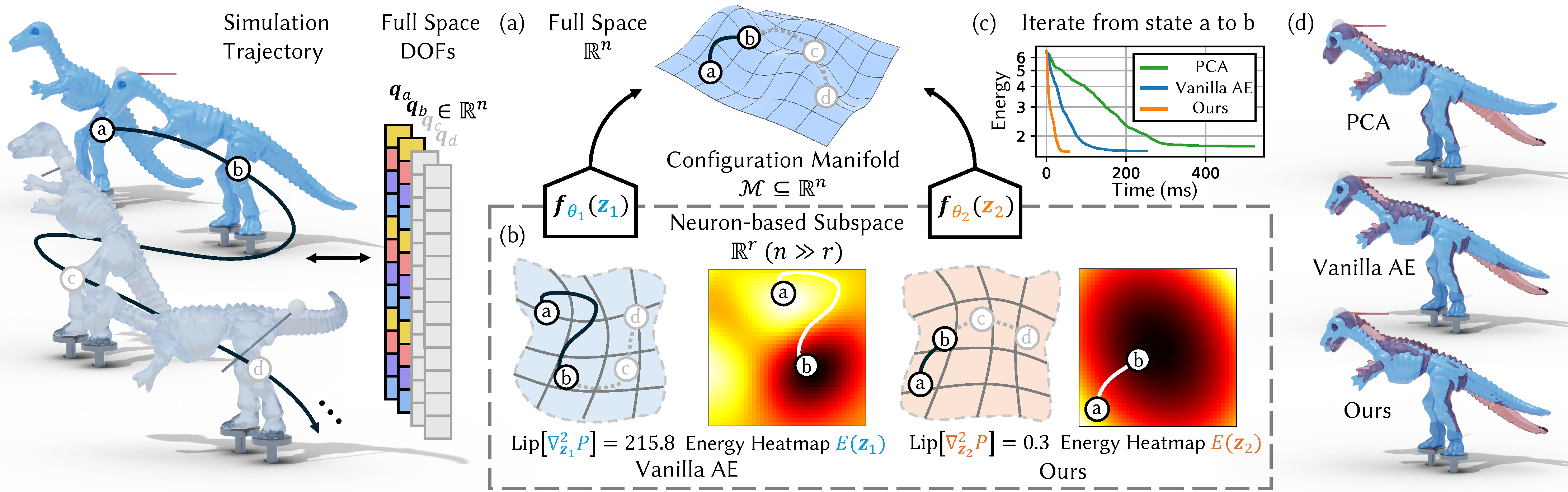

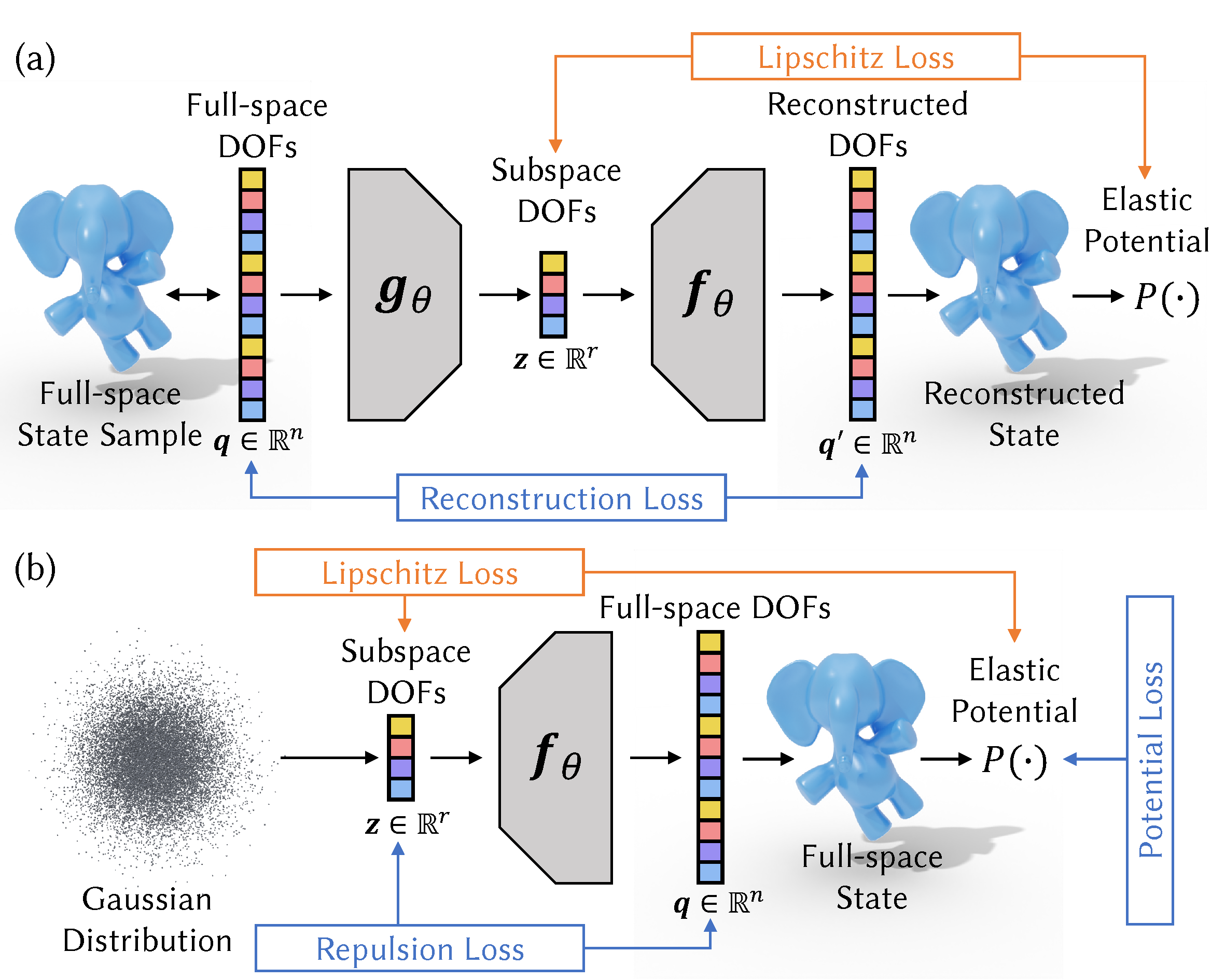

Overview

An overview of our neural subspace construction settings. (a) The supervised setting. (b) The unsupervised setting. Conventional methods consider only the construction losses shown in blue and do not optimize the Lipschitz loss (shown in orange), which controls the landscape of the simulation objective in the subspace.

Experiment results

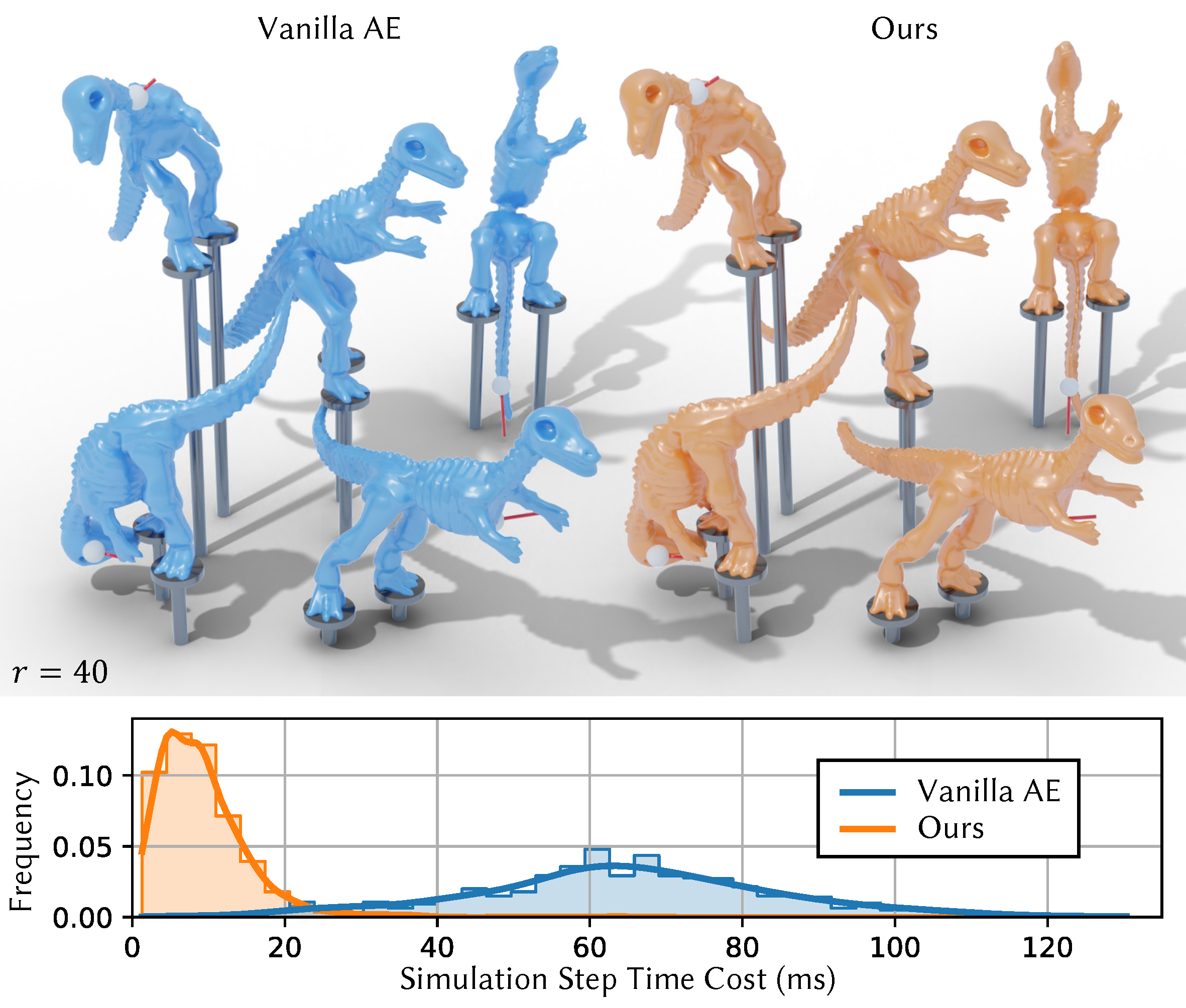

Quantitative Comparison on Simulation Speed

We compare the simulation speed of our method and the vanilla subspace construction by collecting the distribution of simulation time costs for the two methods. For the dinosaur example, in which applied interactions produce complex deformations, our method reaches an acceleration rate of 6.83x.

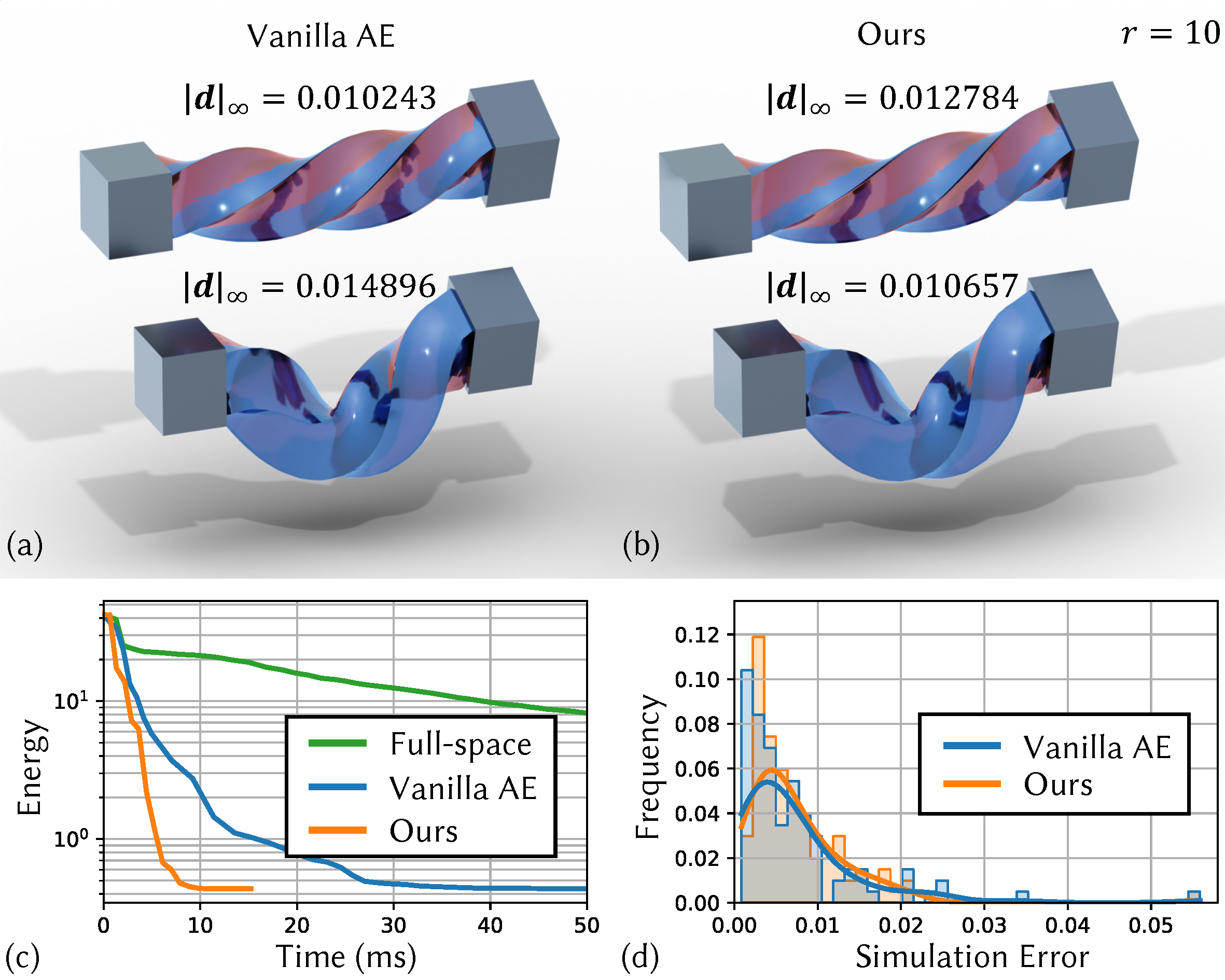

Quantitative Comparison on Simulation Quality

We compare the simulation quality of our method and the vanilla subspace construction. (a) Results of the vanilla neural subspace. (b) Results of our method with optimized Lipschitz energy. (c) For the simulation shown, the two methods converge to the same level of potential energy, while our method converges \(\sim\)3 times faster. (d) Simulation error distribution with full-space simulation as the reference. The solid lines are Kernel Density Estimation (KDE) plots that visualize the estimated probability density curves of the simulation error. The KDE of our method closely resembles that of the vanilla neural subspace construction, indicating comparable simulation quality.
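To make the KDE curves in panel (d) concrete, the following is a minimal sketch of a one-dimensional Gaussian kernel density estimate. It is not the plotting code used for the figure: the error samples are hypothetical placeholder values, and the bandwidth uses the normal-reference rule of thumb as an assumed default.

```python
import math

def gaussian_kde(samples, bandwidth=None):
    """Return a function that estimates the probability density of `samples`."""
    n = len(samples)
    if bandwidth is None:
        # Normal-reference rule of thumb (an assumed default, not from the paper).
        mean = sum(samples) / n
        std = math.sqrt(sum((s - mean) ** 2 for s in samples) / (n - 1))
        bandwidth = 1.06 * std * n ** (-1.0 / 5.0)
    norm = 1.0 / (n * bandwidth * math.sqrt(2.0 * math.pi))

    def density(x):
        # Average of Gaussian bumps centered on each sample.
        return norm * sum(
            math.exp(-0.5 * ((x - s) / bandwidth) ** 2) for s in samples
        )

    return density

# Hypothetical simulation errors (placeholders, not measured data).
errors_vanilla = [0.010, 0.012, 0.015, 0.011, 0.020, 0.013]
errors_ours = [0.011, 0.012, 0.014, 0.012, 0.019, 0.014]

kde_vanilla = gaussian_kde(errors_vanilla)
kde_ours = gaussian_kde(errors_ours)

# Evaluate both curves on a shared grid so they can be overlaid in one plot.
step = 0.0002
grid = [-0.02 + i * step for i in range(401)]  # covers [-0.02, 0.06]
curve_vanilla = [kde_vanilla(x) for x in grid]
curve_ours = [kde_ours(x) for x in grid]
```

Two error distributions of comparable quality produce curves with similar peak locations and widths, which is the comparison the figure makes.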

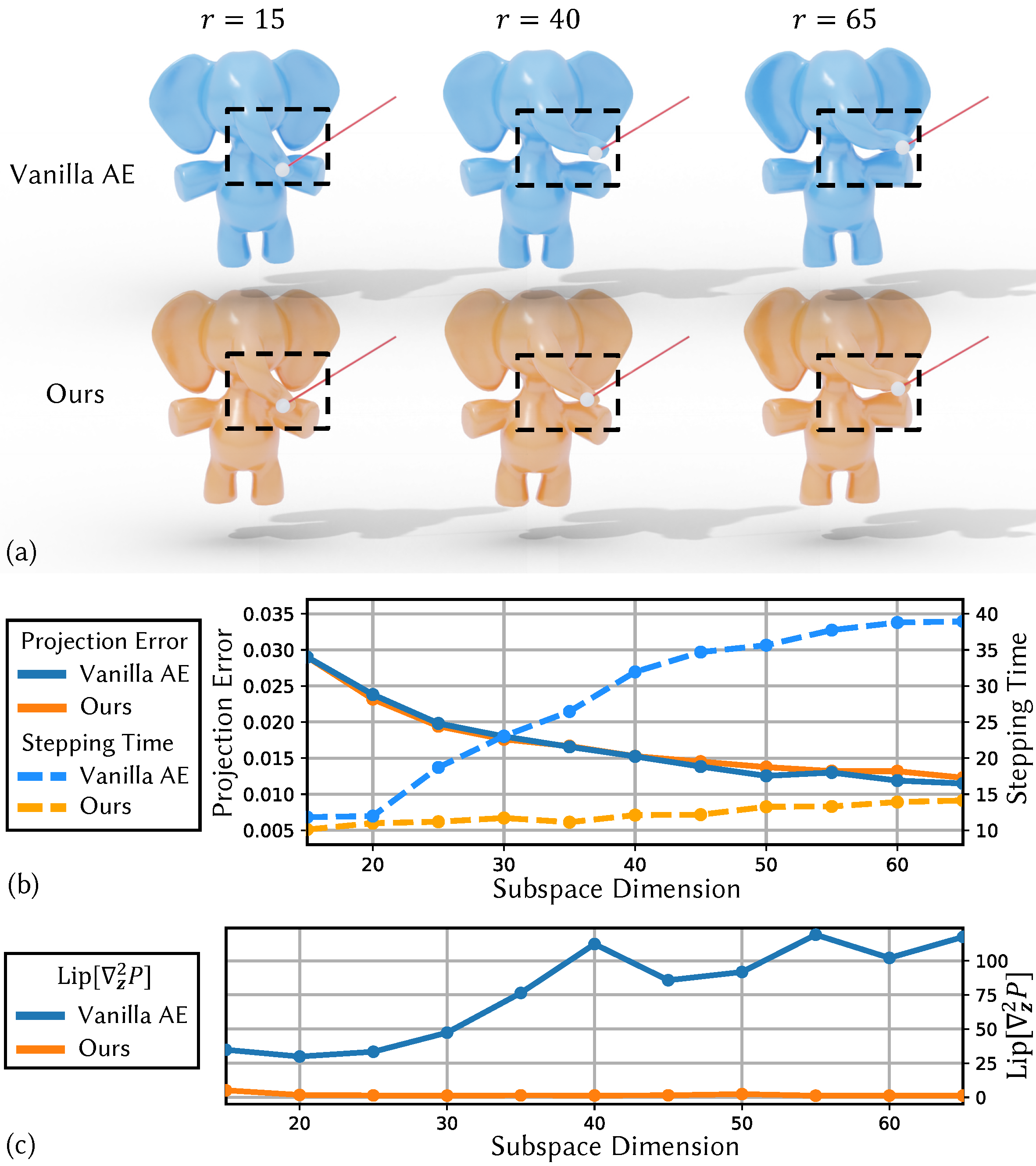

Influence of Subspace Dimension on Simulation Quality and Speed

We construct subspaces of different dimensions for the Elephant example and compare their simulation quality and speed. (a) Qualitative comparison of simulation quality when a fixed interaction is applied to the trunk. (b) Quantitative comparison of simulation quality and speed, measured by the mean projection error of full-space simulation states and the mean time cost per simulation step. (c) Quantitative comparison of the Lipschitz constants of the subspace Hessian. As the subspace dimension increases, our method achieves greater acceleration relative to the vanilla method, because our simulation time remains small while that of the vanilla method increases significantly. This illustrates that our method can improve simulation quality by increasing the subspace dimension with only a small increase in simulation time.

Other experiments

We test the proposed Lipschitz optimization method on various physical systems and demonstrate that it effectively reduces the Lipschitz constant of the subspace potential Hessian, resulting in simulation speedups. Furthermore, our method preserves subspace quality, achieving similar configuration manifold coverage and comparable simulation quality (please refer to our paper and supplemental video for all results).
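As a rough illustration of what the Lipschitz constant of a Hessian measures, the sketch below estimates it by sampling pairs of subspace coordinates and taking the largest ratio of Hessian change to coordinate change. Everything here is an assumption for illustration: the toy potential, the sampling domain, and the function names are hypothetical, and this is not the Lipschitz energy or training procedure from the paper.

```python
import math
import random

def toy_hessian_diag(z, stiffness, a):
    """Diagonal of the Hessian of a toy potential (hypothetical, not the paper's):
    E(z) = sum_i (stiffness / 2 * z_i^2 + a / 4 * z_i^4)."""
    return [stiffness + 3.0 * a * zi * zi for zi in z]

def estimate_hessian_lipschitz(a, stiffness=1.0, dim=2, n_pairs=2000, seed=0):
    """Sampled lower bound on sup ||H(z1) - H(z2)||_F / ||z1 - z2|| over [-1, 1]^dim."""
    rng = random.Random(seed)
    best = 0.0
    for _ in range(n_pairs):
        z1 = [rng.uniform(-1.0, 1.0) for _ in range(dim)]
        z2 = [rng.uniform(-1.0, 1.0) for _ in range(dim)]
        dz = math.sqrt(sum((p - q) ** 2 for p, q in zip(z1, z2)))
        if dz < 1e-12:
            continue  # skip (near-)identical points to avoid division by zero
        h1 = toy_hessian_diag(z1, stiffness, a)
        h2 = toy_hessian_diag(z2, stiffness, a)
        # The Hessian is diagonal, so the Frobenius norm reduces to a vector norm.
        dh = math.sqrt(sum((p - q) ** 2 for p, q in zip(h1, h2)))
        best = max(best, dh / dz)
    return best

# A more strongly nonlinear potential (larger `a`) has a faster-changing Hessian.
lip_strong = estimate_hessian_lipschitz(a=1.0)
lip_weak = estimate_hessian_lipschitz(a=0.1)
print(f"strongly nonlinear: {lip_strong:.3f}, weakly nonlinear: {lip_weak:.3f}")
```

A smaller value means the Hessian varies more slowly across the subspace, so a local quadratic model of the objective stays accurate over a larger region. This is the general reason second-order solvers tend to converge in fewer iterations on such landscapes.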

BibTeX

@article{lyu2024accelerate,
  title={Accelerate Neural Subspace-Based Reduced-Order Solver of Deformable Simulation by Lipschitz Optimization},
  author={Lyu, Aoran and Zhao, Shixian and Xian, Chuhua and Cen, Zhihao and Cai, Hongmin and Fang, Guoxin},
  journal={ACM Transactions on Graphics (TOG)},
  volume={43},
  number={6},
  pages={1--10},
  year={2024},
  publisher={ACM New York, NY, USA}
}